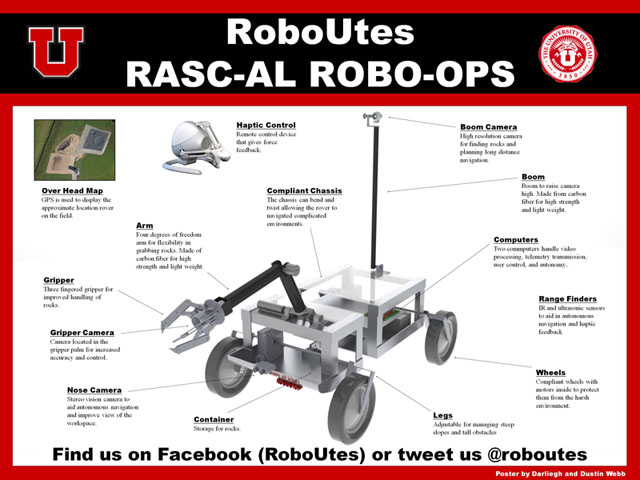

University of Utah RASC-AL ROBO-OPS Team

Published:

Mars rover competition

Published:

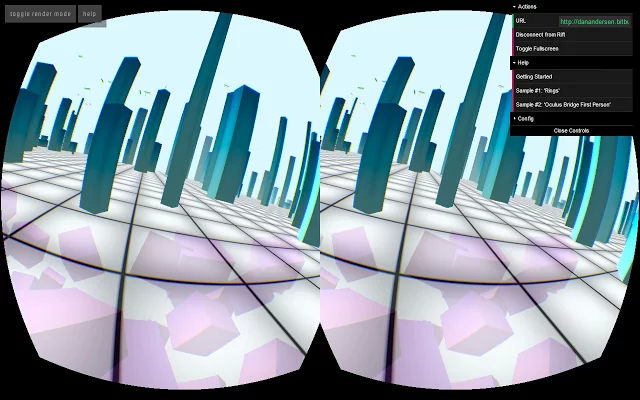

Cupola VR Viewer for Oculus Rift Head-Tracking in WebGL Virtual Environments

Published:

Final project for Purdue CS535

Published:

A multi-year interdisciplinary research project to improve surgical performance in austere environments using augmented reality

Published:

Presented at ISMAR 2019

Published in The Visual Computer, 2015

Existing telestrator-based surgical telementoring systems require a trainee surgeon to shift focus frequently between the operating field and a nearby monitor to acquire and apply instructions from a remote mentor. We present a novel approach to surgical telementoring where annotations are superimposed directly onto the surgical field using an augmented reality (AR) simulated transparent display. We present our first steps towards realizing this vision, using two networked conventional tablets to allow a mentor to remotely annotate the operating field as seen by a trainee. Annotations are anchored to the surgical field as the trainee tablet moves and as the surgical field deforms or becomes occluded. The system is built exclusively from compact commodity-level components—all imaging and processing are performed on the two tablets.

[PDF]

[Video]

Citation: Andersen D, Popescu V, Cabrera ME, Shanghavi A, Gomez G, Marley S, Mullis B, Wachs J. Virtual annotations of the surgical field through an augmented reality transparent display. The Visual Computer. 2016 Nov 1;32(11):1481-98. https://doi.org/10.1007/s00371-015-1135-6

Published in NextMed/MMVR22, 2016

Conventional surgical telementoring systems require the trainee to shift focus away from the operating field to a nearby monitor to receive mentor guidance. This paper presents the next generation of telementoring systems. Our system, STAR (System for Telementoring with Augmented Reality), avoids focus shifts by placing mentor annotations directly into the trainee’s field of view using augmented reality transparent display technology. This prototype was tested with pre-medical and medical students. Experiments were conducted where participants were asked to identify precise operating field locations communicated to them using either STAR or a conventional telementoring system. STAR was shown to improve accuracy and to reduce focus shifts. The initial STAR prototype only provides an approximate transparent display effect, without visual continuity between the display and the surrounding area. The current version of our transparent display provides visual continuity by showing the geometry and color of the operating field from the trainee’s viewpoint.

[PDF]

Citation: Andersen D, Popescu V, Cabrera ME, Shanghavi A, Gómez G, Marley S, Mullis B, Wachs JP. Avoiding Focus Shifts in Surgical Telementoring Using an Augmented Reality Transparent Display. In MMVR 2016 Apr 19 (Vol. 22, pp. 9-14).

Published in Surgery, 2016

Background: The goal of this study was to design and implement a novel surgical telementoring system called STAR (System for Telementoring with Augmented Reality) that uses a virtual transparent display to convey precise locations in the operating field to a trainee surgeon. This system was compared to a conventional system based on a telestrator for surgical instruction.

Methods: A telementoring system was developed and evaluated in a study which used a 1 x 2 between-subjects design with telementoring system, i.e. STAR or Conventional, as the independent variable. The participants in the study were 20 pre-medical or medical students who had no prior experience with telementoring. Each participant completed a task of port placement and a task of abdominal incision under telementoring using either the STAR or the Conventional system. The metrics used to test performance when using the system were placement error, number of focus shifts, and time to task completion.

Results: When compared to the Conventional system, participants using STAR completed the two tasks with less placement error (45% and 68%) and with fewer focus shifts (86% and 44%), but more slowly (19% for each task).

Conclusions: Using STAR resulted in decreased annotation placement error, fewer focus shifts, but greater times to task completion. STAR placed virtual annotations directly onto the trainee surgeon’s field of view of the operating field by conveying location with great accuracy; this technology helped to avoid shifts in focus, decreased depth perception, and enabled fine-tuning execution of the task to match telementored instruction, but led to greater times to task completion.

[PDF]

Citation: Andersen D, Popescu V, Cabrera ME, Shanghavi A, Gomez G, Marley S, Mullis B, Wachs JP. Medical telementoring using an augmented reality transparent display. Surgery. 2016 Jun 1;159(6):1646-53. https://doi.org/10.1016/j.surg.2015.12.016

Published in ISMAR, 2016

Hand-held transparent displays are important infrastructure for augmented reality applications. Truly transparent displays are not yet feasible in hand-held form, and a promising alternative is to simulate transparency by displaying the image the user would see if the display were not there. Previous simulated transparent displays have important limitations, such as being tethered to auxiliary workstations, requiring the user to wear obtrusive head-tracking devices, or lacking the depth acquisition support that is needed for an accurate transparency effect for close-range scenes.

We describe a general simulated transparent display and three prototype implementations (P1, P2, and P3), which take advantage of emerging mobile devices and accessories. P1 uses an off-the-shelf smartphone with built-in head-tracking support; P1 is compact and suitable for outdoor scenes, providing an accurate transparency effect for scene distances greater than 6m. P2 uses a tablet with a built-in depth camera; P2 is compact and suitable for short-distance indoor scenes, but the user has to hold the display in a fixed position. P3 uses a conventional tablet enhanced with on-board depth acquisition and head tracking accessories; P3 compensates for user head motion and provides accurate transparency even for close-range scenes. The prototypes are hand-held and self-contained, without the need of auxiliary workstations for computation.

[PDF]

[Video]

Citation: Andersen D, Popescu V, Lin C, Cabrera ME, Shanghavi A, Wachs J. A hand-held, self-contained simulated transparent display. In 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct) 2016 Sep 19 (pp. 96-101). IEEE. https://doi.org/10.1109/ISMAR-Adjunct.2016.0049

Published in VAR4Good, 2018

This paper presents two positions about the use of augmented reality (AR) in healthcare scenarios, informed by the authors’ experience as an interdisciplinary team of academics and medical practitioners who have been researching, implementing, and validating an AR surgical telementoring system. First, AR has the potential to greatly improve the areas of surgical telementoring and of medical training on patient simulators. In austere environments, surgical telementoring that connects surgeons with remote experts can be enhanced with the use of AR annotations visualized directly in the surgeon’s field of view. Patient simulators can gain additional value for medical training by overlaying the current and future steps of procedures as AR imagery onto a physical simulator. Second, AR annotations for telementoring and for simulator-based training can be delivered either by video see-through tablet displays or by AR head-mounted displays (HMDs). The paper discusses the two AR approaches by looking at accuracy, depth perception, visualization continuity, visualization latency, and user encumbrance. Specific advantages and disadvantages to each approach mean that the choice of one display method or another must be carefully tailored to the healthcare application in which it is being used.

[PDF]

Citation: Andersen D, Lin C, Popescu V, Munoz ER, Cabrera ME, Mullis B, Zarzaur B, Marley S, Wachs J. Augmented Visual Instruction for Surgical Practice and Training. In 2018 IEEE Workshop on Augmented and Virtual Realities for Good (VAR4Good) 2018 Mar 18 (pp. 1-5). IEEE. https://doi.org/10.1109/VAR4GOOD.2018.8576884

Published in IEEE VR, 2018

We present a system that enables a novice user to acquire a large indoor scene in minutes as a collection of images that are sufficient for five degrees-of-freedom virtual navigation by image morphing. The user walks through the scene wearing an augmented reality head-mounted display (AR HMD) enhanced with a panoramic video camera. The AR HMD visualizes a 2D grid partitioning of a dynamically generated floor plan, which guides the user to acquire a panorama from each grid cell. The panoramas are registered offline using both AR HMD tracking data and structure-from-motion tools. Feature correspondences are established between neighboring panoramas. The resulting panoramas and correspondences support interactive rendering via image morphing with any view direction and from any viewpoint on the acquisition plane.

[PDF]

Citation: Andersen D, Popescu V. An AR-Guided System for Fast Image-Based Modeling of Indoor Scenes. In 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) 2018 Mar 18 (pp. 501-502). IEEE. https://doi.org/10.1109/VR.2018.8446560

Published in EuroVR, 2018

We present a system that enables a novice user to acquire a large indoor scene in minutes as a collection of images sufficient for five degrees-of-freedom virtual navigation by image morphing. The user walks through the scene wearing an augmented reality head-mounted display (AR HMD) enhanced with a panoramic video camera. The AR HMD shows a 2D grid of a dynamically generated floor plan, which guides the user to acquire a panorama from each grid cell. After acquisition, panoramas are preliminarily registered using the AR HMD tracking data, corresponding features are detected in pairs of neighboring panoramas, and the correspondences are used to refine panorama registration. The registered panoramas and their correspondences support rendering the scene interactively with any view direction and from any viewpoint on the acquisition plane. An HMD VR interface guides the user who optimizes visualization fidelity interactively, by aligning the viewpoint with one of the hundreds of acquisition locations evenly sampling the floor plane.

[PDF]

[Video]

Citation: Andersen D, Popescu V. HMD-Guided Image-Based Modeling and Rendering of Indoor Scenes. In International Conference on Virtual Reality and Augmented Reality 2018 Oct 22 (pp. 73-93). Springer, Cham. https://doi.org/10.1007/978-3-030-01790-3_5

Published in Annals of Surgery, 2019

Objective: This study investigates the benefits of a surgical telementoring system based on an augmented reality head-mounted display (ARHMD) that overlays surgical instructions directly onto the surgeon’s view of the operating field, without workspace obstruction.

Summary Background Data: In conventional telestrator-based telementoring, the surgeon views annotations of the surgical field by shifting focus to a nearby monitor, which substantially increases cognitive load. As an alternative, tablets have been used between the surgeon and the patient to display instructions; however, tablets impose additional obstructions of surgeon’s motions.

Methods: Twenty medical students performed anatomical marking (Task1) and abdominal incision (Task2) on a patient simulator, in 1 of 2 telementoring conditions: ARHMD and telestrator. The dependent variables were placement error, number of focus shifts, and completion time. Furthermore, workspace efficiency was quantified as the number and duration of potential surgeon-tablet collisions avoided by the ARHMD.

Results: The ARHMD condition yielded smaller placement errors (Task1: 45%, P < 0.001; Task2: 14%, P = 0.01), fewer focus shifts (Task1: 93%, P < 0.001; Task2: 88%, P = 0.0039), and longer completion times (Task1: 31%, P < 0.001; Task2: 24%, P = 0.013). Furthermore, the ARHMD avoided potential tablet collisions (4.8 for 3.2 seconds in Task1; 3.8 for 1.3 seconds in Task2).

Conclusion: The ARHMD system promises to improve accuracy and to eliminate focus shifts in surgical telementoring. Because ARHMD participants were able to refine their execution of instructions, task completion time increased. Unlike a tablet system, the ARHMD does not require modifying natural motions to avoid collisions.

[PDF]

Citation: Rojas-Muñoz E, Cabrera ME, Andersen D, Popescu V, Marley S, Mullis B, Zarzaur B, Wachs J. Surgical telementoring without encumbrance: a comparative study of see-through augmented reality-based approaches. Annals of Surgery. 2019 Aug 1;270(2):384-9. https://doi.org/10.1097/SLA.0000000000002764

Published in ISMAR, TVCG, 2019

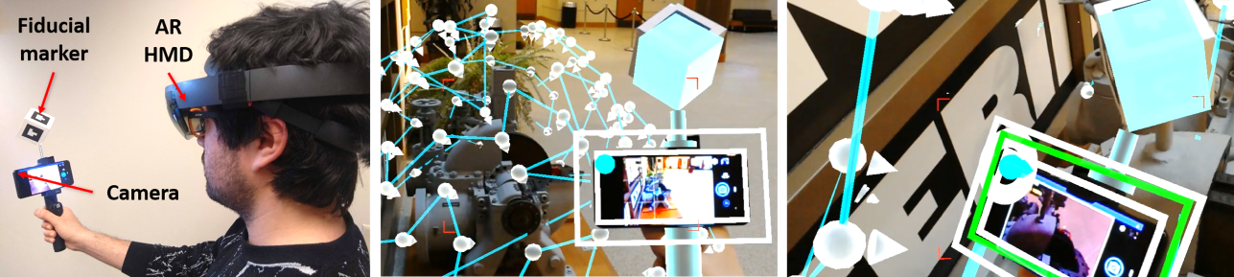

Photogrammetry is a popular method of 3D reconstruction that uses conventional photos as input. This method can achieve high quality reconstructions so long as the scene is densely acquired from multiple views with sufficient overlap between nearby images. However, it is challenging for a human operator to know during acquisition if sufficient coverage has been achieved. Insufficient coverage of the scene can result in holes, missing regions, or even a complete failure of reconstruction. These errors require manually repairing the model or returning to the scene to acquire additional views, which is time-consuming and often infeasible. We present a novel approach to photogrammetric acquisition that uses an AR HMD to predict a set of covering views and to interactively guide an operator to capture imagery from each view. The operator wears an AR HMD and uses a handheld camera rig that is tracked relative to the AR HMD with a fiducial marker. The AR HMD tracks its pose relative to the environment and automatically generates a coarse geometric model of the scene, which our approach analyzes at runtime to generate a set of human-reachable acquisition views covering the scene with consistent camera-to-scene distance and image overlap. The generated view locations are rendered to the operator on the AR HMD. Interactive visual feedback informs the operator how to align the camera to assume each suggested pose. When the camera is in range, an image is automatically captured. In this way, a set of images suitable for 3D reconstruction can be captured in a matter of minutes. In a user study, participants who were novices at photogrammetry were tasked with acquiring a challenging and complex scene either without guidance or with our AR HMD based guidance. Participants using our guidance achieved improved reconstructions without cases of reconstruction failure as in the control condition. 
Our AR HMD based approach is self-contained, portable, and provides specific acquisition guidance tailored to the geometry of the scene being captured.

[PDF]

[Video]

Citation: Andersen D, Villano P, Popescu V. AR HMD Guidance for Controlled Hand-Held 3D Acquisition. IEEE Transactions on Visualization and Computer Graphics. 2019 Aug 12;25(11):3073-82. https://doi.org/10.1109/TVCG.2019.2932172

Published in Surgery, 2020

Background: The surgical workforce, particularly in rural regions, needs novel approaches to reinforce the skills and confidence of health practitioners. Although conventional telementoring systems have proven beneficial to address this gap, the benefits of platforms of augmented reality-based telementoring in the coaching and confidence of medical personnel are yet to be evaluated.

Methods: A total of 20 participants were guided by remote expert surgeons to perform leg fasciotomies on cadavers under one of two conditions: (1) telementoring (with our System for Telementoring with Augmented Reality) or (2) independently reviewing the procedure beforehand. Using the Individual Performance Score and the Weighted Individual Performance Score, two on-site, expert surgeons evaluated the participants. Postexperiment metrics included number of errors, procedure completion time, and self-reported confidence scores. A total of six objective measurements were obtained to describe the self-reported confidence scores and the overall quality of the coaching. Additional analyses were performed based on the participants’ expertise level.

Results: Participants using the System for Telementoring with Augmented Reality received 10% greater Weighted Individual Performance Score (P = .03) and performed 67% fewer errors (P = .04). Moreover, participants with lower surgical expertise who used the System for Telementoring with Augmented Reality received 17% greater Individual Performance Score (P = .04), 32% greater Weighted Individual Performance Score (P < .01) and performed 92% fewer errors (P < .001). In addition, participants using the System for Telementoring with Augmented Reality reported 25% more confidence in all evaluated aspects (P < .03). On average, participants using the System for Telementoring with Augmented Reality received augmented reality guidance 19 times and received guidance for 47% of their total task completion time.

Conclusion: Participants using the System for Telementoring with Augmented Reality performed leg fasciotomies with fewer errors and received better performance scores. In addition, participants using the System for Telementoring with Augmented Reality reported being more confident when performing fasciotomies under telementoring. Augmented Reality Head-Mounted Display–based telementoring successfully provided confidence and coaching to medical personnel.

Citation: Rojas-Muñoz E, Cabrera ME, Lin C, Andersen D, Popescu V, Anderson K, Zarzaur BL, Mullis B, Wachs JP. The System for Telementoring with Augmented Reality (STAR): A head-mounted display to improve surgical coaching and confidence in remote areas. Surgery. 2020 Jan 6. https://doi.org/10.1016/j.surg.2019.11.008

Published in Purdue University Graduate School, 2020

Computer visualization can effectively deliver instructions to a user whose task requires understanding of a real world scene. Consider the example of surgical telementoring, where a general surgeon performs an emergency surgery under the guidance of a remote mentor. The mentor guidance includes annotations of the operating field, which conventionally are displayed to the surgeon on a nearby monitor. However, this conventional visualization of mentor guidance requires the surgeon to look back and forth between the monitor and the operating field, which can lead to cognitive load, delays, or even medical errors. Another example is 3D acquisition of a real-world scene, where an operator must acquire multiple images of the scene from specific viewpoints to ensure appropriate scene coverage and thus achieve quality 3D reconstruction. The conventional approach is for the operator to plan the acquisition locations using conventional visualization tools, and then to try to execute the plan from memory, or with the help of a static map. Such approaches lead to incomplete coverage during acquisition, resulting in an inaccurate reconstruction of the 3D scene which can only be addressed at the high and sometimes prohibitive cost of repeating acquisition.

Augmented reality (AR) promises to overcome the limitations of conventional out-of-context visualization of real world scenes by delivering visual guidance directly into the user’s field of view, guidance that remains in-context throughout the completion of the task. In this thesis, we propose and validate several AR visual interfaces that provide effective visual guidance for task completion in the context of surgical telementoring and 3D scene acquisition.

A first AR interface provides a mentee surgeon with visual guidance from a remote mentor using a simulated transparent display. A computer tablet suspended above the patient captures the operating field with its on-board video camera, the live video is sent to the mentor who annotates it, and the annotations are sent back to the mentee where they are displayed on the tablet, integrating the mentor-created annotations directly into the mentee’s view of the operating field. We show through user studies that surgical task performance improves when using the AR surgical telementoring interface compared to when using the conventional visualization of the annotated operating field on a nearby monitor.

A second AR surgical telementoring interface provides the mentee surgeon with visual guidance through an AR head-mounted display (AR HMD). We validate this approach in user studies with medical professionals in the context of practice cricothyrotomy and lower-limb fasciotomy procedures, and show improved performance over conventional surgical guidance. A comparison between our simulated transparent display and our AR HMD surgical telementoring interfaces reveals that the HMD has the advantages of reduced workspace encumbrance and of correct depth perception of annotations, whereas the transparent display has the advantage of reduced surgeon head and neck encumbrance and of annotation visualization quality.

A third AR interface provides operator guidance for effective image-based modeling and rendering of real-world scenes. During the modeling phase, the AR interface builds and dynamically updates a map of the scene that is displayed to the user through an AR HMD, which leads to the efficient acquisition of a five-degree-of-freedom image-based model of large, complex indoor environments. During rendering, the interface guides the user towards the highest-density parts of the image-based model which result in the highest output image quality. We show through a study that first-time users of our interface can acquire a quality image-based model of a 13m $\times$ 10m indoor environment in 7 minutes.

A fourth AR interface provides operator guidance for effective capture of a 3D scene in the context of photogrammetric reconstruction. The interface relies on an AR HMD with a tracked hand-held camera rig to construct a sufficient set of six-degrees-of-freedom camera acquisition poses and then to steer the user to align the camera with the prescribed poses quickly and accurately. We show through a study that first-time users of our interface are significantly more likely to achieve complete 3D reconstructions compared to conventional freehand acquisition. We then investigated the design space of AR HMD interfaces for mid-air pose alignment with an added ergonomics concern, which resulted in five candidate interfaces that sample this design space. A user study identified the aspects of the AR interface design that influence the ergonomics during extended use, informing AR HMD interface design for the important task of mid-air pose alignment.

[PDF]

Citation: Andersen DS. Effective User Guidance through Augmented Reality Interfaces: Advances and Applications (Doctoral dissertation, Purdue University Graduate School). https://doi.org/10.25394/PGS.12184701